User Overview

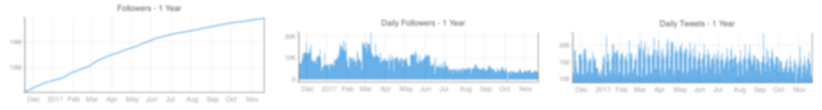

Followers and Following

Followers

Following

Tweet Stats

Analysed 0 tweets from the last 0.0 days.

Tweets Day of Week (UTC)

Tweets Hour of Day (UTC)

Key: Tweets, Retweets, Quotes, Replies

Tweets Day and Hour Heatmap (UTC)

| Top Applications | Count | % |
|---|---|---|

| Top Tweet Types | Count | % |
|---|---|---|

| Top Content Types | Count | % |
|---|---|---|

Tweets

No tweets found